What does a Simpson classifier see?

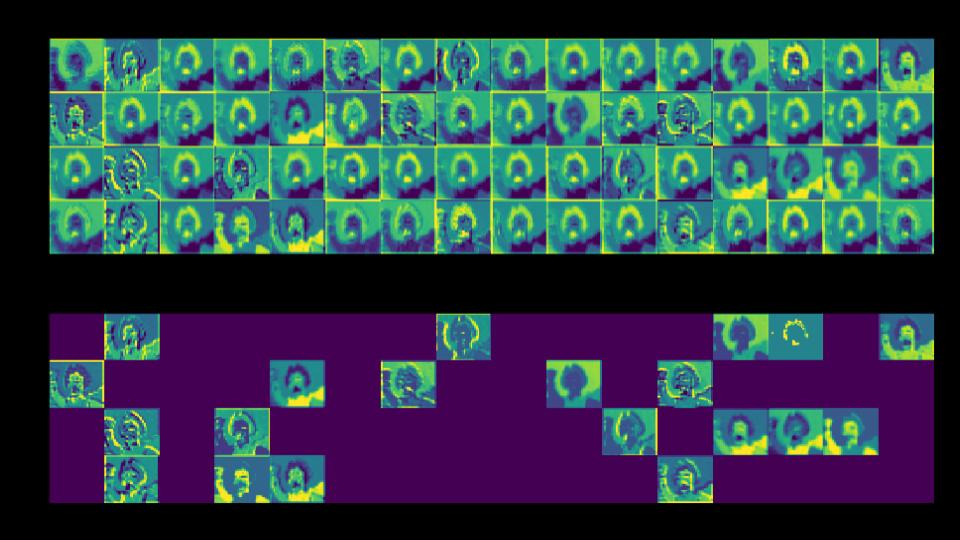

There’s been renewed debate on the relevance of modern deep learning approaches to neuroscience and biology. Because I study memory, and remembering often invokes mental imagery, I’ve become increasingly interested in what neural networks “see”. To have some fun while learning, I used images from The Simpsons to build a neural network that labels characters with reasonable accuracy (77%). Here are a few of the images I used:

I took an existing architecture (‘little VGG’) and re-trained it on a dataset of Simpsons images. To get an idea of what it was “seeing”, I fed the image of Homer above (from the fantastic episode ‘Lisa the Iconoclast’) through the network and visualized a few layers.
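If you want a feel for what “visualizing a few layers” means in practice, the basic move is to run an image forward through the network and hold on to each layer’s output instead of throwing it away. Below is a minimal NumPy sketch of that idea. It is not the code behind the images in this post: the two tiny conv + ReLU + max-pool layers, the random weights, the random stand-in “image”, and the helper names (`conv_relu`, `max_pool`, `collect_activations`) are all assumptions made for illustration.

```python
import numpy as np


def conv_relu(x, kernels):
    """Valid cross-correlation followed by ReLU.

    x:       array of shape (C, H, W)
    kernels: array of shape (K, C, kh, kw)
    returns: array of shape (K, H - kh + 1, W - kw + 1)
    """
    k, _, kh, kw = kernels.shape
    _, h, w = x.shape
    out = np.empty((k, h - kh + 1, w - kw + 1))
    for i in range(out.shape[1]):
        for j in range(out.shape[2]):
            patch = x[:, i:i + kh, j:j + kw]  # (C, kh, kw)
            # Contract every kernel with the patch, giving one value per kernel.
            out[:, i, j] = np.tensordot(kernels, patch, axes=3)
    return np.maximum(out, 0.0)


def max_pool(x, size=2):
    """Non-overlapping max pooling over each feature map in x (K, H, W)."""
    k, h, w = x.shape
    h2, w2 = h // size, w // size
    trimmed = x[:, :h2 * size, :w2 * size]
    return trimmed.reshape(k, h2, size, w2, size).max(axis=(2, 4))


def collect_activations(image, weights):
    """Run the image through each layer and keep every intermediate output."""
    activations = []
    x = image
    for kernels in weights:
        x = max_pool(conv_relu(x, kernels))
        activations.append(x)
    return activations


# Hypothetical stand-ins: a random 32x32 RGB "image" and random, untrained filters.
rng = np.random.default_rng(0)
image = rng.random((3, 32, 32))
weights = [
    rng.normal(size=(4, 3, 3, 3)),  # layer 1: 4 filters over 3 input channels
    rng.normal(size=(8, 4, 3, 3)),  # layer 2: 8 filters over 4 input channels
]

activations = collect_activations(image, weights)
for depth, act in enumerate(activations, start=1):
    print(f"layer {depth}: {act.shape[0]} feature maps of size {act.shape[1:]}")
```

Each entry in `activations` is a stack of 2-D feature maps, so plotting them one by one (for example with matplotlib’s `imshow`) gives a grid of images per layer. With a trained network you would swap in the learned filters and a real image; most deep learning frameworks also let you read out intermediate layer outputs directly.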

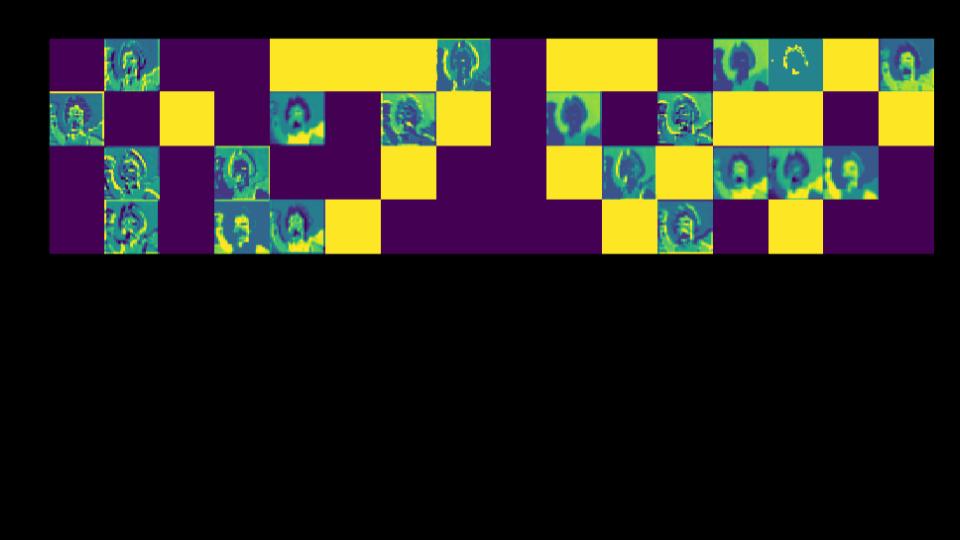

The early layers of the network retain a lot of the properties of the original image. Here are the first two layers:

Even in the third layer of the network, there are some Homer-esque images that are still recognizable:

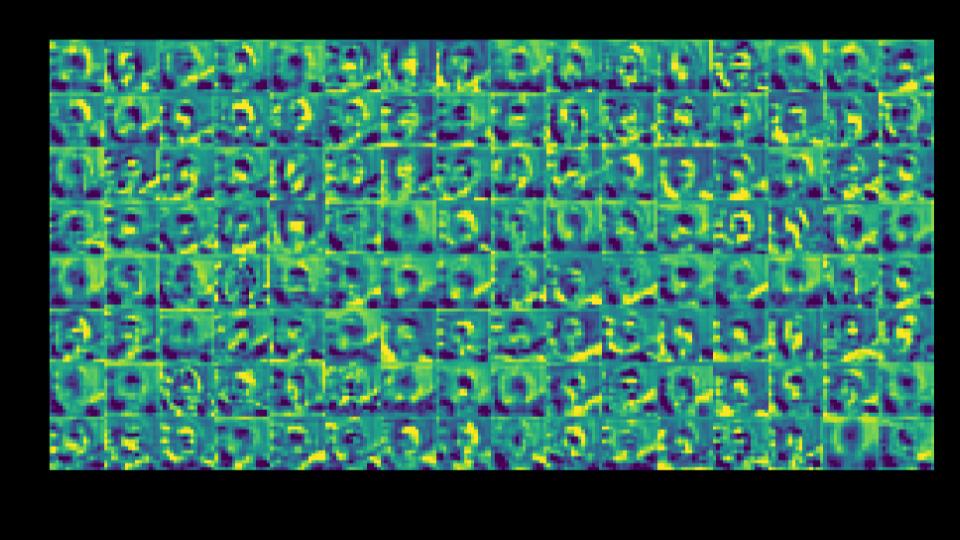

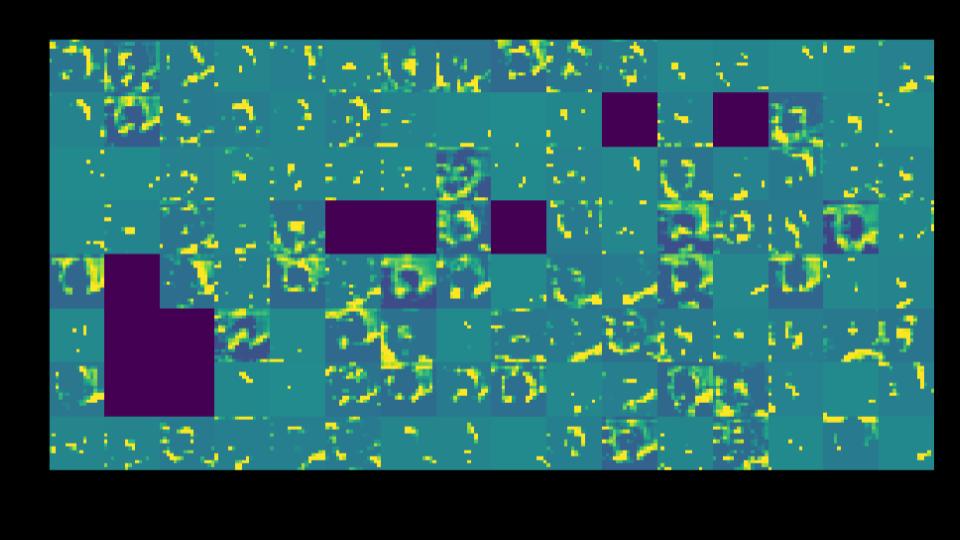

As you move into the ‘deeper’ layers, things start to get… weird.


How do the layers of a neural network like the one above map onto the brain? Stay tuned! Head to my GitHub for the code used in the above examples.

Mason Price lives in the PNW and completed his Ph.D. at the University of Missouri. He also holds a B.A. in Political Science.